I am a master’s student at Harbin Institute of Technology (Shenzhen). Prior to this, I obtained my bachelor’s degree from the same institution.

🤔 Research Interests

- Multimodal: Multimodal Large Language Models

- Efficient Training and Inference: Visual Token Reduction, Continual Learning, Model Compression

🔥 News

- 2026.02: One paper on efficient training for MLLMs is out on arXiv, see here.

- 2025.10: Honored to join the LONG Group at HKUST as a visiting student, working with Prof. Long Chen.

- 2025.08: One paper on continual learning for MLLMs was accepted to the EMNLP 2025 main conference, see here.

- 2025.08: One Zhihu blog post on the mechanism of MLLMs was reposted by PaperWeekly.

- 2024.04: One paper on compressing diffusion models is out on arXiv, see here.

- 2023.09: Excited to be a Research Intern at OPPO AI Center.

📝 Publications

arXiv

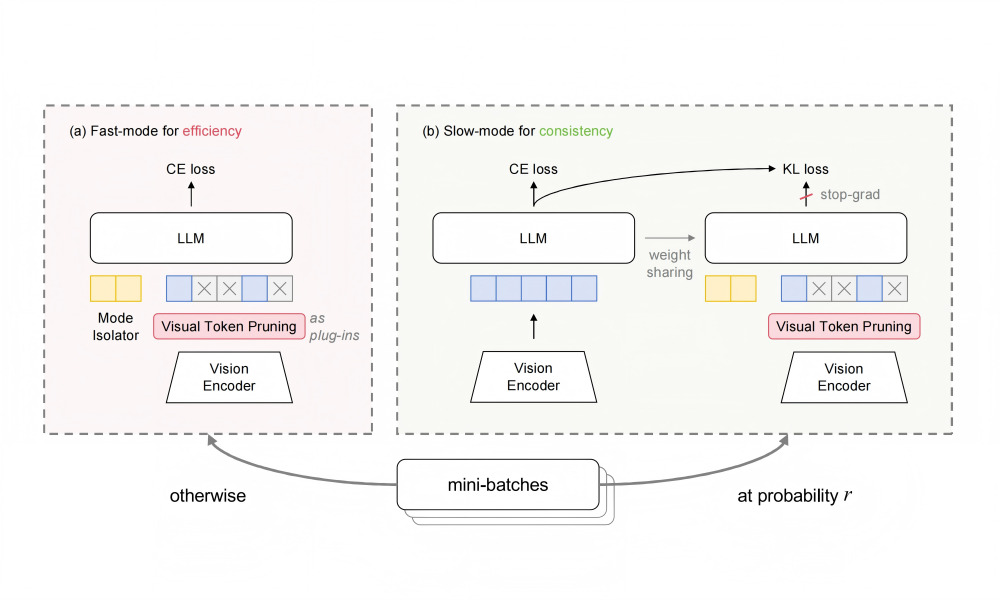

Fast-Slow Efficient Training for Multimodal Large Language Models via Visual Token Pruning

Dingkun Zhang, Shuhan Qi, Yulin Wu, Xinyu Xiao, Xuan Wang, Long Chen

- A framework that accelerates MLLM training via visual token pruning.

- Overcomes the training-inference mismatch that arises when visual token pruning is introduced at training time.

EMNLP 2025 main

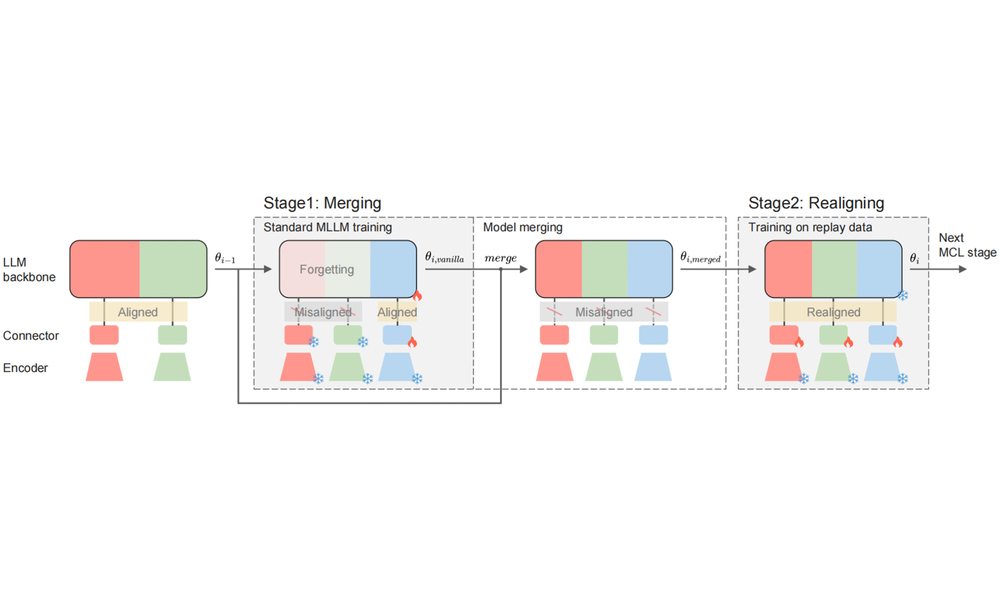

Merge then Realign: Simple and Effective Modality-Incremental Continual Learning for Multimodal LLMs

Dingkun Zhang, Shuhan Qi, Xinyu Xiao, Kehai Chen, Xuan Wang

- Extends existing MLLMs to more modalities efficiently.

- A minimalist approach that mitigates catastrophic forgetting.

arXiv

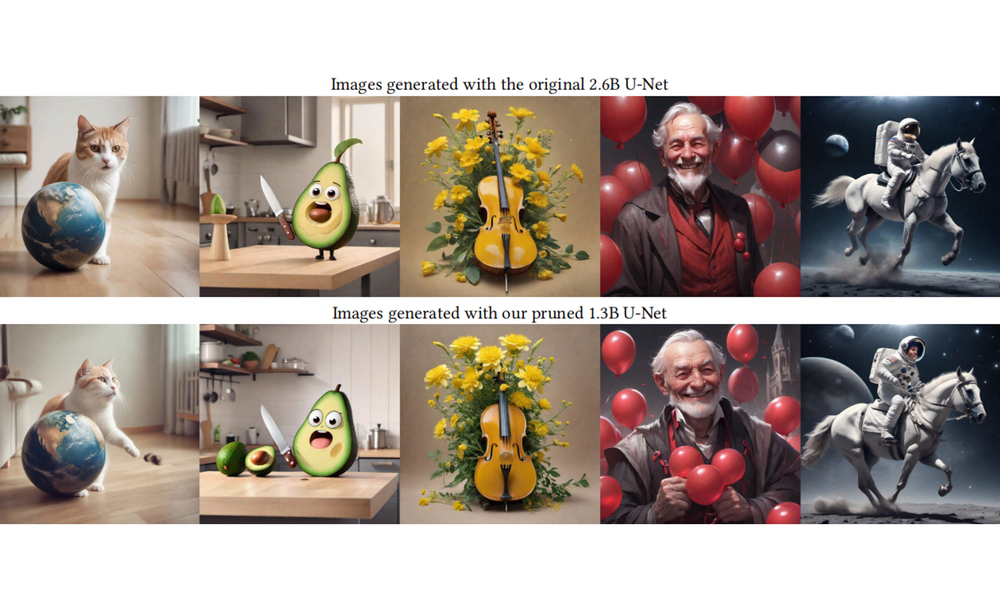

LAPTOP-Diff: Layer Pruning and Normalized Distillation for Compressing Diffusion Models

Dingkun Zhang*, Sijia Li*, Chen Chen, Qingsong Xie, Haonan Lu

- Compresses diffusion models through layer pruning and knowledge distillation.

📖 Education

- 2024.06 - present, Master’s Student, Harbin Institute of Technology (Shenzhen).

- 2020.09 - 2024.06, Undergraduate Student, Harbin Institute of Technology (Shenzhen).

💻 Internships

- 2025.10 - 2026.02, Visiting Student, Hong Kong University of Science and Technology.

- 2023.09 - 2024.04, Research Intern, OPPO AI Center.